Interview originally printed in the Dutch science newsletter “Onderzoek Nederland” (September 2015 issue, nr. 372) and based on an earlier article I wrote myself. In the interview I explain the real risk that getting H2020 funding under the Industrial Leadership and Societal Challenges pillars is turning into a lottery. Many researchers, particularly those in mid-career, when the pressure to publish is highest, choose to take part in as many proposals as possible in the hope that at least one will succeed. The solution for these pillars is to focus the evaluation not on scientific excellence, but on the participation of industry (i.e. Impact). After all, these pillars are meant to solve real-world problems and create real-world jobs for Europe. Only if proposals score equally on Impact should the evaluators consider scientific innovation to decide who gets funded.


“Hoe de feedback verdween uit Brussel” (“How the feedback disappeared from Brussels”)

Interview originally printed in the Dutch science newsletter “Onderzoek Nederland” (June 2015). The interview (in Dutch) focused on the lack of feedback, and the quality of what feedback there is, in the Evaluation Summary Reports (ESRs) of stage-1 and stage-2 H2020 proposals. At present, applicants do not receive an ESR in stage-1. Why not, if the evaluators’ comments could help make the proposal better in stage-2? And why is there so little information in stage-2? How is an applicant to judge whether to resubmit the proposal if the suggestions and comments for improvement are so vague? My point is that ESRs should help applicants, not confuse them.

ScienceBusiness conference on future of H2020

Commissioner Moedas talking about Open Innovation during the ScienceBusiness H2020 conference (Feb. 2016)

Last week, Brussels-based ScienceBusiness held the 4th edition of its Horizon2020 conference. The central theme of the day was “Open Innovation” as the way forward for European research.

Commissioner Moedas shared the European Commission’s latest thinking on the European Innovation Council (EIC). Other speakers (a non-exhaustive list!) included former CERN Director-General Rolf-Dieter Heuer, now Chair of the EU Scientific Advice Mechanism, and Jean-Pierre Bourguignon, President of the European Research Council. Nathan Paul Myhrvold, formerly Chief Technology Officer at Microsoft and co-founder of Intellectual Ventures, compared US and EU approaches to innovation and gave examples of how a more entrepreneurial attitude among the new generation of scientists is already radically reshaping our economies and societies. Over 230 people from academia and industry attended the conference, and several thousand watched the live-stream!

In a separate workshop I moderated the ScienceBusiness Network meeting, where we shared best practices on H2020 proposal development and implementation. The Network Members discussed ‘do’s & don’ts’ and identified possible improvements for the next Research Framework Programme (FP9). On behalf of the network I then took part in the panel discussion with Robert-Jan Smits, the Director-General of DG Research at the Commission (see picture above). Smits outlined several changes to the further execution of the H2020 calls, as well as further simplifications in project implementation, for example in the use of timesheets. Smits admitted that despite the overall success of the programme and the endorsement of the application process by the European research community, significant improvements are still possible, in particular in the evaluation process. Most Calls will in future follow a 2-stage process, with the Commission aiming for a success rate of around 1:3 in the second stage. Following a question from the audience, Smits confirmed that he would re-assess the Commission’s earlier decision to scrap the so-called “Consensus Meeting”. The audience felt that the Consensus Meeting plays an important part in ensuring that the assessments of all evaluation panellists are taken into account before the final ranking. Smits also promised to check whether the Evaluation Summary Report could in future be somewhat more specific and detailed than it is now.

The video-cast is still available at: http://www.sciencebusiness.net/events/2016/the-2016-science-business-horizon-2020-conference-4th-edition/

Remarkable conclusions from High Level Expert Group on Economic Impact of FP7

(Please note that the article below is a personal opinion and does not reflect the view or position of any organisation I am working with/for).


Three weeks ago a High Level Expert Group published the ex-post evaluation of the 7th Framework Programme for Research (FP7), the predecessor of Horizon2020. The pan-European programme ran for 7 years, spending about €55 billion across a multitude of interlinked research programmes. According to the report, 139.000 proposals were submitted, of which 25.000 received funding.

Whilst this report confirms what a great number of other reports have already said about the programme’s ability to advance science and create international collaborations, its assessment on the economic impact of the programme is – to say the least – remarkable.

Let’s start with a simple point. In chapter 6 of the report, “Estimation of macro-economic effects, growth and jobs”, the Expert Group relies on two earlier reports (Fougeyrollas et al., 2012 and Zagamé et al., 2012) to estimate that “…the leverage effect of the programme [stands; RP] at 0,74, indicating that for each euro the EC contributed to FP7 funded research, the other organizations involved (such as universities, industries, SME, research organisations) contributed in average 0,74 euro”.

What a statement to make: after all, everybody knows that in order to get access to FP7 funding, each organisation had to provide a substantial cash or in-kind contribution. The Expert Group then goes on to argue that “…the own contributions of organizations to the funded projects can be estimated at 37 billion euro. In addition, the total staff costs for developing and submitting more than 139.000 proposals at an estimate of 3 billion euro were taken into account. In total, the contribution of grantees can be estimated at 40 billion euro”. Again, considering that organisations were required to chip in at least 30% of their own money to access the 70% of FP7 funding, one can only conclude that this amount was, in effect, a donation by these organisations and should not be confused with the Actual Impact of the FP7 programme, as the Expert Group would have you believe.

But there is more: using this flawed argument, the Expert Group then concludes that: “The total investment into RTD caused by FP7 can therefore be estimated at approximately 90 billion euro”. Huh?! So if I take the €50 billion of FP7 funding given by the Commission and add the contributions of the successful applicant organisations (as the EC doesn’t fund 100%), I end up declaring that FP7 has contributed €90 billion to the EU economy?! I don’t think so. Clearly, when doing their calculations, the Experts used researcher salaries as the main metric. The report confirms this: “When translating these economic impacts into job effects, it was necessary to estimate the average annual staff costs of researchers (for the direct effects) and of employees in the industries effected by RTD (for the indirect effects). Based on estimated annual staff costs for researchers of 70.000 euro, FP7 directly created 130.000 jobs in RTD over a period of ten years (i.e. 1,3 million persons‐years).” If that’s the case, then why did we bother with FP7 at all? We could have achieved the same economic result by introducing a simple European tax reduction programme for employed researchers.
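The Expert Group’s chain of figures can be retraced in a few lines. This Python sketch uses only numbers quoted above (€50 billion of EC funding, the 0,74 leverage, €3 billion of proposal-writing costs and €70.000 annual staff cost per researcher). It shows that dividing the ‘total investment’ by one researcher’s annual salary lands very close to the report’s 1,3 million person-years; that the Expert Group actually derived its job figure this way is an assumption, not something the quoted text states.

```python
# Retracing the FP7 ex-post evaluation arithmetic quoted above.
# Amounts in billions of euros unless stated otherwise.

EC_CONTRIBUTION = 50.0        # EC funding implied by the report (37 / 0,74)
LEVERAGE = 0.74               # own contribution per EC euro
PROPOSAL_COSTS = 3.0          # staff cost of writing ~139.000 proposals
STAFF_COST_PER_YEAR = 70_000  # euros per researcher per year

own_contribution = EC_CONTRIBUTION * LEVERAGE        # ~37
grantee_total = own_contribution + PROPOSAL_COSTS    # ~40
total_rtd = EC_CONTRIBUTION + grantee_total          # ~90, the "total investment"

# Assumption: jobs = total spend divided by one researcher's annual salary.
person_years = total_rtd * 1e9 / STAFF_COST_PER_YEAR

print(f"own contribution: €{own_contribution:.0f} bn")
print(f"grantee total:    €{grantee_total:.0f} bn")
print(f"'total RTD':      €{total_rtd:.0f} bn")
print(f"person-years:     {person_years / 1e6:.2f} million")
```

The near match with 1,3 million person-years illustrates why the job figure says more about how much money was spent on salaries than about the economic impact of the research itself.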

In fact, rather than accepting the Expert Group’s grand conclusion that “Considering both ‐ the leverage effect and the multiplier effect ‐ each euro contributed by the EC to FP7 caused approximately 11 euro of direct and indirect economic effects”, I would take the numbers to mean that, thanks to the leverage effect and the multiplier effect, the European Commission managed to convince organisations during FP7 to give away 11 euros for each euro it put into FP7. Furthermore, contrary to the Expert Group, I would argue that their multiplier should then at least also have taken into account all the hours spent by applicant consortia writing proposals that were above the funding threshold but still did not get funded. As I showed in a previous article, the value of those hours amounts to roughly 1/5 of the total H2020 budget. In simple economic terms: those hours in service of the European economy could have been spent better.

FP7 wás a success: like no other research programme, it encouraged researchers from academia and industry alike to consider and test new ideas and to start working together. The problem with FP7 was that ‘knowledge deepening’ did not (semi-)automatically lead to research results being taken up by industry and translated into technologies and innovations. And that is, to a large extent, what FP7 aimed to do. So in that sense, the only conclusion I can draw is that FP7 was a good effort. Its most important achievement, for me, was that it paved the way for a new kind of thinking inside the Commission, one that puts concrete and measurable impacts for science and society, for jobs and competitiveness at the centre. H2020 is the result of understanding that FP7 had some design faults which needed correcting. All the more interesting, then, that Robert-Jan Smits’ (DG for Research at the Commission) solution for stemming the current tsunami of proposals being submitted to H2020 is to put even more emphasis in the evaluation process on expected, measurable Impact. Go Commission!

H2020: Time to evaluate the evaluators?!

A few weeks ago the European Commission published a list with all the names of evaluators they used during the first year of H2020[1]. The list contains the names of all evaluators and the H2020 specific work programme in which they judged proposals (but not the topics!). In addition it shows the “Skills & Competences” of each evaluator as he/she wrote it into the EC’s Expert Database.

It’s not the first time the EC has published this type of list, of course, but the publication comes at a poignant moment, as success rates for submitted proposals are declining rapidly and many researchers are wondering whether it is still worth developing anything for H2020 at all. In previous articles I already wrote about some of the main reasons behind this decline and possible solutions.

In this article I want to focus on the actual H2020 evaluation process, as many researchers find it increasingly frustrating to write top-level proposals (as evidenced by scores well over the threshold, often higher than 13.5 points) and then see their efforts dismissed in short, sometimes seemingly standardised, comments and descriptions. Is it just the brutal trade-off between the increasing number of applications and a limited budget, or do the evaluation comments and points hide another side to the process? Why are some people starting to call the H2020 evaluation process a ‘lottery’? Is there some truth to their criticism? How can the European Commission counter this perception?

I am sure most researchers have nothing against the EC’s basic principle of using a “science-beauty contest” to allocate H2020 funds. There is a lot of discussion, however, also on social media, about the selection process. Part of that discussion concerns the level of actual experience and specific expertise of those who do the selecting. Simply put: are the evaluators truly the most senior qualified experts in the H2020 domains they are judging proposals on, or is a possibly significant part of the panels made up of younger researchers (using the evaluation process to learn about good proposal writing) and mid-level scientists who are good in their specific field, but also have to evaluate proposals that are (partly) outside their core competence?

Before I continue, please accept that this article is in no way intended to belittle the expertise of people who have registered on the FP7 and H2020 Expert Database and have made valuable time available to read and judge funding proposals. I myself am in no position to say what constitutes sufficient research expertise for peers to accept when their proposals are being judged. The only point I will be making below is that if the EC wants to keep the H2020 evaluation process credible (and not stigmatised as a ‘lottery’), it needs to demonstrate to the community that it selects evaluators not just on availability but on their real understanding of what top-class research in a given field means.

So let me continue with my argument: H2020 specifically states that it is looking for the most innovative ideas from our brightest researchers, developed by the best possible consortia. If that is the case, and knowing that you cannot be an expert-evaluator if you are part of a consortium in the same funding Call, then quite a few of the “best possible experts” will already have disqualified themselves from the evaluation process by default. It is also widely known that not all top-level experts want to get involved in evaluation, either because it is not sufficiently important to them or because of other (time-)constraints. As a result, panels of typically 3-5 evaluators will often consist of a combination of real experts in that specific research domain and other evaluators who come from adjacent or even (very) different disciplines. Part of a given evaluation committee may therefore consist of what I call, for lack of a better word, ‘best effort amateurs’. Again: I have no doubt these are good researchers in their own field, but here they may be asked to judge projects outside their core competence.

Now you might think I am making these observations because I want to build a quick case against the evaluation process as it stands. That’s not true. What I said above reflects views and comments made by many researchers, on and off stage, both from academia and from industry. It’s thát feeling of unease and not knowing that contributes to the more general and growing perception that H2020 is turning into something of a lottery. For that reason alone, it is very important that the Commission now shows that its choice of combinations of evaluators is based on specific merit and not on general availability.

One way to refute the perception of a lottery is, in my mind, for the EC to perform a rigorous analysis (and subsequent publication!) of the scientific quality of the people it used as evaluators. How to do that? A start would be to assess each evaluator against the number of publications ánd the number of citations he/she has in a given research field. You can include some form of weighting, if required, to allow for the type and relative standing of the journals in which the publications appeared. The assessment could also include the number of relevant patents held by researchers. This should give a fairly clear indication of the average level of research seniority on the evaluation panels. One issue when doing this will be that very experienced evaluators from industry may have fewer published articles, as publications are often considered less important (or even less desirable) by the companies they work for. So the criterion of publications and citations should be applied primarily to academic evaluators. Essentially the same holds for patents, as ‘industry patents’ are often registered to and owned by the company the researchers work for (or have worked for in the past). In other words: the analysis will probably not deliver a perfect picture, but as academics make up the majority of the evaluation panels, it should still give a fairly good indication.
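To make the proposed analysis a little more concrete, here is a minimal Python sketch of a per-evaluator seniority index. Everything in it is hypothetical: the `Evaluator` fields, the journal weights, the `1 + citations` weighting and the patent weight are illustrative assumptions, not an EC method or data format. In line with the argument above, publication and citation metrics are applied only to academic evaluators, and the panel average is taken over those.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Evaluator:
    """Hypothetical evaluator record; these fields are not from the EC database."""
    sector: str                                   # "academia" or "industry"
    # One (journal_weight, citations) pair per publication in the evaluated field.
    publications: List[Tuple[float, int]] = field(default_factory=list)
    patents: int = 0                              # relevant patents


def seniority_index(e: Evaluator, patent_weight: float = 2.0) -> Optional[float]:
    """Weighted publication/citation/patent score, or None for non-academics."""
    if e.sector != "academia":
        # Publication counts say little about industry experts, so skip them.
        return None
    pub_score = sum(weight * (1 + citations) for weight, citations in e.publications)
    return pub_score + patent_weight * e.patents


def panel_average(panel: List[Evaluator]) -> Optional[float]:
    """Mean index over the academic members of one evaluation panel."""
    scores = [s for s in (seniority_index(e) for e in panel) if s is not None]
    return sum(scores) / len(scores) if scores else None


panel = [
    Evaluator("academia", [(1.0, 10), (0.5, 4)], patents=1),  # 11 + 2.5 + 2 = 15.5
    Evaluator("academia", [(0.5, 0)]),                         # 0.5
    Evaluator("industry", patents=3),                          # excluded from the average
]
print(panel_average(panel))
```

A simple linear weighting is used only because it is easy to read; any real analysis would need to justify its weights and how they differ between research fields.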

The next step could be to check whether the evaluators were actually tasked to assess only projects in their core research domain, or whether they also judged projects as ‘best effort amateurs’. The EC could do that by matching the publications/citations/patents overview against the “Skills & Competences” the researchers listed in the Expert Database and the specific H2020 topics for which they were asked to sit on an evaluation panel. Once you know that, you can also check whether the use of ‘best effort amateurs’ happened because there simply were not enough available domain-specific top experts, or whether the choice was based on prior experience with, or availability of, particular evaluators. I would find the latter strange: after all, the EC database of experts exceeds 25.000 names. The final step, in my view, would then be for the Commission to publish the findings (with the statistical data anonymised, of course) so that the wider research community can see for itself whether the selection of proposals is based on senior research quality linked to the H2020 proposal domain, or not.

I am sure that my suggestions on how to analyse the H2020 evaluation process are neither complete nor fully scientifically rigorous. Factors like the number of proposals each evaluator has to score and the average time spent reading individual proposals will probably also affect scores. So how much time pressure are the evaluators under when they do their assessment, and are there ways to reduce that pressure?

Please take this article as an effort to trigger further discussion that will lead to appropriate action by the EC to prevent our top researchers from dismissing H2020 as a worthwhile route to scientific excellence.

So, European Commission: are you up to the challenge? If not, I am sure somebody out there will take up the gauntlet… I will be most interested in the results. Undoubtedly to be continued…

[1] http://ec.europa.eu/research/participants/portal/desktop/en/funding/reference_docs.html#h2020-expertslists-excellent-erc

‘Impact’ should be leading evaluation criterion in funding proposals under H2020 SC and LEIT

In last week’s interview in the Dutch science newsletter “Onderzoek Nederland” (September 2015 issue) I argue that the only way to keep the Societal Challenges (SC) pillar and the Industrial Leadership (LEIT) pillar viable is to change the order and emphasis of the proposal evaluation. Instead of “scientific excellence” as the first evaluation criterion, the emphasis should be mainly on which proposals have the most meaningful “industry participation” (i.e. Impact), including a detailed commercial roll-out exit strategy. After all, these pillars are meant to solve real-world problems and create real-world jobs for Europe. Only if proposals score equally on Impact should, in my view, the evaluators consider scientific innovation to decide who gets funded.

Now many may argue that science comes first, otherwise you have nothing to manufacture or build in the first place. I don’t necessarily disagree with that view, but if you take a close look at the expected results listed in the Calls, it is clear that SC and LEIT are essentially about applied research and not so much fundamental research, for which there is a separate pillar. Combining existing science and technology and applying it through prototyping and demonstrations is what companies do best. If they participate in projects in a serious way with their own knowledge and capacity, I think the evaluators can safely assume there is real-world demand and a route to that market. So if proposals are judged on the Impact criterion first (or with a heavier weighting than the other sections), many proposals that start from scientific curiosity can quickly be filtered out of the evaluation process.

Yes, you might say, but that will only put more pressure on the other H2020 pillar, Excellent Science. I agree that this will probably happen, but in that specific funding pillar it is only good and natural that only the absolute top X% get funded. That’s what scientific curiosity is all about! At the same time I fully support the view of many that national governments should restore (and even increase) national funding for scientific research to pre-2008 levels, so that the research phases between fundamental and applied are also covered. I think there was never a realistic chance that H2020 could cover and finance all aspects of European research. It cannot replace national funding, so the responsibility for maintaining Europe’s scientific excellence must lie mostly with the member states.

The interview – which is in Dutch – also covers a few other possible ideas to improve H2020 success rates, among which the suggestion that proposals that do not even meet the EC’s threshold (on any of the three evaluation sections) could be told they cannot resubmit their proposal for two years. My argument for this is that even if the resubmission proves better than the first-time submission, these projects are unlikely to shoot from below-threshold to the top 10%-20% that are so good that they have a realistic chance of being funded.

H2020 FET-Open: 1,8% chance of getting funded

A former colleague of mine, Frederik Vandecasteele, sent me the submission and success statistics for the latest FET-Open call for proposals (FETOPEN-2014-2015-RIA). The results are outright painful and cannot for a minute be presented by the European Commission as further evidence of the success of the H2020 programme and its evaluation system. Have a look:

- topic budget for FETOPEN-2014-2015-RIA: €38.500.000 (deadline 31/03)

- number of proposals submitted: 670

- number of above-threshold proposals: 326

- total budget requested by above-threshold proposals: €1.078.991.003

- average requested budget per above-threshold proposal: €1.078.991.003 / 326 ≈ €3.309.788

- estimated number of fundable projects: €38.500.000 / €3.309.788 ≈ 12

The estimated chance of getting funded under this Call was therefore 12/670 ≈ 1,8%!!!

(data source: http://ec.europa.eu/research/participants/portal/doc/call/h2020/h2020-fetopen-2014-2015-ria/1665116-fet_open_flash_call_info_en.doc)
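The arithmetic behind these figures is easy to check. The short Python sketch below recomputes it from the numbers listed above; like the original estimate, it assumes that every funded project requests the average amount asked for by the above-threshold proposals.

```python
# Figures for FETOPEN-2014-2015-RIA as listed above.
TOPIC_BUDGET = 38_500_000                  # euros
SUBMITTED = 670
ABOVE_THRESHOLD = 326
REQUESTED_ABOVE_THRESHOLD = 1_078_991_003  # euros

# Assumption: each funded project requests the average above-threshold amount.
avg_request = REQUESTED_ABOVE_THRESHOLD / ABOVE_THRESHOLD
fundable = round(TOPIC_BUDGET / avg_request)  # about 11.6 projects, rounded to 12

rate_submitted = fundable / SUBMITTED
rate_above_threshold = fundable / ABOVE_THRESHOLD

print(f"average request: €{avg_request:,.0f}")
print(f"fundable projects: {fundable}")
print(f"success rate (all submitted):   {rate_submitted:.1%}")
print(f"success rate (above threshold): {rate_above_threshold:.1%}")
```

Even measured only against the proposals that passed the threshold, the success rate stays below 4%.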

FET-Open was always a competitive programme, but this is getting ridiculous. I wonder whether it would not be better to shut the programme down for a while, so all those hard-working researchers can spend their time on useful things rather than writing proposal after proposal that go nowhere. How can funding fewer than 2 of every 100 submitted projects (and fewer than 4 of every 100 above-threshold projects) help maintain Europe’s leading role in global science??

The numbers do not say anything about who is getting funded. They only show that too many people are competing for too little money. Assuming that the member states are not willing or able to significantly increase the H2020 budget, the only solution I see is a fundamental rethink of the number of themes/domains being funded. H2020 is presently trying to satisfy everybody’s needs and is satisfying almost none of them… It is time to re-prioritize if Europe wants to have any chance of remaining a science leader in … something…

Making hard choices of this kind will undoubtedly be difficult, but if nothing is done soon, the H2020 programme will ultimately make itself irrelevant to the advancement of science.

H2020 – how to avoid that its success becomes its failure

So now we know: in the first year of the H2020 Research & Innovation programme roughly 45.000 proposals were submitted for funding. According to Robert-Jan Smits, the European Commission’s Director-General of DG RTD, the funding rate has dropped from 19% at the end of the predecessor programme FP7 to a mere 14%. This is well below recently published average success rates in the United States (NSF: 22-24% and NIH: 18-21%[1]) or Australia (NHMRC: 21%[2]). Should we worry, or is this proof of the programme’s popularity and success?

Let’s put some overall numbers to this: if indeed only 1 in 7 proposals was selected (several H2020 sub-programmes like Marie Skłodowska-Curie and the SME Instrument have even lower success rates of 5% and 11% respectively), roughly 38.700 submitted proposals were rejected (86% of 45.000). From our own figures as an innovation and grants consultancy with 20 years of experience in EU funding, we know that on average a collaborative single-stage proposal costs a consortium between €70.000 and €100.000 in own time and effort to develop and write. Assuming that half of the Calls were divided into 2 stages (and let’s agree that, realistically speaking, 70% of the total project development time and effort goes into developing and writing the stage-1 proposal and 10% is then moved on to the next stage), this implies that overall between €2.5 and €3 billion was spent in vain by applicants. And this will happen every year, adding up over the programme to a little more than 20% of the total H2020 budget. Ergo: yes, it is time to start worrying.
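For readers who want to test these assumptions, here is a rough Python reconstruction of the estimate. It takes the figures from the text (45.000 proposals, a 14% success rate, €70.000-€100.000 per proposal, half of the Calls in two stages, 70% of the effort in stage 1, a €79 billion budget over seven years), but the way they are combined is an assumption made for this sketch: half of the rejected proposals are taken to come from two-stage Calls, all of those are assumed to fail at stage 1 and so cost only the stage-1 share, and the 10% carried over to stage 2 is ignored. Under those assumptions it lands at roughly €2.3-3.3 billion per year, in the same range as the figure above.

```python
# Rough reconstruction of the "effort spent in vain" estimate for H2020's first year.
# Inputs come from the text; the way they are combined is an illustrative assumption.


def rejected_proposals(submitted: int, success_pct: int) -> int:
    """Number of proposals rejected at a given success rate (in percent)."""
    return submitted * (100 - success_pct) // 100


def wasted_cost(rejected: int, cost_per_proposal: int,
                two_stage_pct: int, stage1_effort_pct: int) -> int:
    """Euros spent on rejected proposals.

    Single-stage rejections cost the full proposal budget; rejections from
    two-stage Calls are assumed to fail at stage 1 and cost only the stage-1
    share of the effort. Integer percentages keep the arithmetic exact.
    """
    weight = (100 - two_stage_pct) * 100 + two_stage_pct * stage1_effort_pct
    return rejected * cost_per_proposal * weight // 10_000


rejected = rejected_proposals(45_000, 14)      # 38,700 rejected proposals
low = wasted_cost(rejected, 70_000, 50, 70)
high = wasted_cost(rejected, 100_000, 50, 70)

H2020_BUDGET = 79_000_000_000                  # €79 billion over seven years
for label, yearly in (("low", low), ("high", high)):
    share = 7 * yearly / H2020_BUDGET
    print(f"{label}: €{yearly / 1e9:.2f} bn per year, {share:.0%} of H2020 over 7 years")
```

Changing `two_stage_pct` or `stage1_effort_pct` shows how sensitive the total is to the assumed share of two-stage Calls.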

Before continuing my line of argument and proposing some structural improvements, I should be clear that I fully support the H2020 programme as a high-quality innovation programme, and that we should be proud that in Europe we have developed a structure that encourages and facilitates cross-border research and innovation the way it does. Each framework programme has built upon ‘lessons learned’ and from an administrative-bureaucratic point of view, H2020 is arguably the most sophisticated and easy-to-use of them all. However, as researchers perceive national funding to be becoming scarcer due to unpredictable economic changes, their eyes are now much more firmly set on Europe with its €79 billion ring-fenced R&D fund. This appears to have created added pressure to submit proposals to H2020, whether or not they suit the Call and irrespective of their objective level of research and innovation quality.

To give but one example: the recent stage-1 Health Call PHC-11 was very specific about the need for innovative in vivo imaging tools and technologies that make use of existing high-tech engineering or physics solutions, or of innovative ideas and concepts coming from those fields. A total of 348 proposals were submitted in stage-1. How likely is it that there really were 348 different, significantly new and improved imaging technologies out there? Even if every one of the 28 EU Member States had 10 different high-quality research combinations of academia and industry, each with a completely different approach (coming from the engineering and physics community) to solving the challenge, that would still add up to only 280 proposals, not 348. We have many examples like that. Something appears not to be quite right.

In fact, looking at the many 2-stage proposals my company has been involved in recently, I dare argue that what used to be a normal 1-stage proposal in FP6 or FP7 is now a 2nd-stage proposal in H2020. To clarify: the results of the evaluation process thus far make one wonder whether the H2020 1st stage is being used by the Commission as a quick fix to deal with the increasing number of proposals. Stage-1 is no longer used to select the best proposals for admission to a competitive stage-2, but just to dismiss all the proposals that at face value do not match. Stage-1 has become an instrument of discouragement, not one for finding and helping to improve the intrinsic quality of the proposals that go on to the next phase. Does that really matter much?

Of course it does! It means that anyone who has made it to the 2nd stage cannot, for a minute, assume that his/her proposal is intrinsically good or has a real chance of funding. No, it just means that you are now on the same basic level playing field with the other proposals as you would have been in an FP7 single-stage process. There is still a very good chance that the Evaluation Summary Report (ESR) of the 2nd-stage proposal will say that your proposal is not innovative, that the consortium is mediocre or that the expected results are not very marketable. Instead of an average 1:3 or even 1:2 success chance in stage 2, the chances of making it ‘all the way’ are, in many domains and on average across the different sub-themes, much worse. My plea would be either to drop the 2-stage approach altogether, or to make clear that reaching the 2nd stage really means that you have either ‘gold’ or ‘silver’. What should happen to the ‘silver’ proposals I will discuss later in this article.

Back to my previous point: a few weeks ago, a survey among Dutch researchers showed they spend 15% of their research time just on writing national and EU funding proposals. In their opinion this is far too much. In addition to the complexity of setting up collaborative projects, the decreasing success rate is making them more sceptical of the whole funding application process. In this context two issues always emerge: one is about the objective quality of the evaluations and the other is on the success rate of re-submitted proposals.

To start with the first point: you would not be the first to feel that comments in the ESR sometimes appear to have little to do with the project you submitted, that the evaluators do not seem to have read the proposal in great detail, or that they are not fully ‘au fait’ with the state of play in the field. Commission assurances about the quality and fairness of the evaluation process are continually undermined by the deliberate secrecy of the evaluation process itself. One does not know, so one quickly feels that a bad result is undeserved. Also consider this: it is no secret that academics tend to be heavily represented in the pool of evaluators. Having submitted 140 proposals for our clients in the first H2020 year across most domains and sub-programmes, we at PNO have found that projects that were industry-led, or that had a large industry contingent in the consortium, suddenly fared much better than proposals dominated by research organisations or universities. Not necessarily surprising if the Commission happens to have instructed its H2020 evaluators in the spirit of Mariana Mazzucato’s 2013 publication “The Entrepreneurial State”. There she states that “Successful states are obsessed by competition; they make scientists compete for research grants, and businesses compete for start-up funds—and leave the decisions to experts, rather than politicians or bureaucrats. They also foster networks of innovation that stretch from universities to profit-maximising companies, keeping their own role to a minimum”.
There need not be a significant causal link, but what if a stronger focus on ‘profit-maximising Impact’ has taken those same evaluators a little out of their comfort zone, to the point where projects showing high industry participation and high ‘profit potential’ (with profit meaning not just financial profit, but also commercial replication, transferability and job creation) are scored higher, whilst more ‘academic-focused’ projects are judged a little more harshly? Again: all we can do is speculate, but as the Commission does not really provide clear insight, the general feeling among applicants is one of uncertainty. On the other hand, we should also acknowledge and praise the fact that over the years the overall quality of funding proposals has gone up. That in itself is a testament to the professionalism and dedication of all those same researchers and evaluators.

That brings me to the second point: years ago the Commission introduced an eligibility threshold below which a proposal is rejected. That threshold applies to the proposal as a whole, but can also apply to specific sections within a funding proposal. In itself this is a good idea. Then there is the cut-off threshold, which in effect is the score at which the available budget for that Call topic runs out once the proposals have been ranked. The cut-off threshold is therefore different for each Call topic. Now imagine that the cut-off is at 92 points (out of 100 in FP7) or at 4.40 (out of 5 in H2020) and your project scored 91 points (FP7) or 4.25 (H2020). Your project will not get funded. Is it a mediocre or possibly even bad project? No: your project is far above the eligibility threshold and is, by all scientific and market-relevance standards, an excellent piece of work. Still: no money…
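
To make the difference between the two thresholds concrete, here is a minimal sketch of how a cut-off can emerge from ranking: proposals above the eligibility threshold are funded in descending score order until the next one no longer fits the remaining budget, and the last funded score becomes the de facto cut-off. Everything in it is hypothetical: the proposal names, scores, budget, the 3.0 eligibility threshold and the function name `rank_and_fund`. Real H2020 ranking also involves per-criterion thresholds, tie-break rules and panel decisions that are not modelled here.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    name: str
    score: float      # hypothetical evaluation score on a 0-5 scale
    requested: float  # hypothetical requested EU contribution, EUR millions


def rank_and_fund(proposals, budget, eligibility_threshold=3.0):
    """Simplified ranking model (an assumption, not the official procedure).

    Fund eligible proposals in descending score order until the next one no
    longer fits the remaining budget. Returns (funded, near_misses, cut_off),
    where near_misses are eligible proposals left unfunded and cut_off is the
    lowest funded score (None if nothing was funded).
    """
    eligible = sorted(
        (p for p in proposals if p.score >= eligibility_threshold),
        key=lambda p: p.score,
        reverse=True,
    )
    funded, near_misses = [], []
    remaining = budget
    cut_off = None
    for p in eligible:
        # Once one proposal does not fit, everything ranked below it misses out too.
        if not near_misses and p.requested <= remaining:
            funded.append(p.name)
            remaining -= p.requested
            cut_off = p.score
        else:
            near_misses.append(p.name)
    return funded, near_misses, cut_off


topic_proposals = [
    Proposal("A", 4.60, 3.0),
    Proposal("B", 4.40, 2.5),
    Proposal("C", 4.25, 3.0),  # excellent, but below the budget-driven cut-off
    Proposal("D", 3.80, 2.0),
    Proposal("E", 2.70, 1.5),  # below the eligibility threshold: rejected outright
]
funded, near_misses, cut_off = rank_and_fund(topic_proposals, budget=6.0)
print(funded, near_misses, cut_off)  # ['A', 'B'] ['C', 'D'] 4.4
```

In this toy Call, proposal C scores 4.25 against a cut-off of 4.40: well above the eligibility threshold, yet unfunded purely because the budget ran out.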

The obvious approach, assuming you agree with the ESR, is to use its comments to improve the proposal and resubmit it in one of the next rounds of Calls. Sadly, resubmissions do not do well in evaluation rounds, not even those that came within inches of the cut-off threshold the first time. Resubmissions are usually scored by a different evaluation panel, which may hold a very different view from the previous one, often resulting in a new score on innovation, impact or implementation strategy that may even be lower than the old one. FP7 and H2020 programme rules allow the Commission’s responsible project officer to inform the new evaluation panel that the proposal is a resubmission and to give the panel access to the old ESR. The decision appears to be left to the individual project officer. Anecdotal evidence from Commission representatives and from evaluators suggests that this almost never happens, let alone that evaluators could or would check the actual text of the old proposal to establish whether the improvements requested in the ESR have been implemented. A missed opportunity, I believe. After all: ESRs often give applicants helpful suggestions for further improving a near-successful proposal. If the new panel never sees whether those comments were implemented, one might wonder why they are provided in the first place. There are no public figures on the success rate of resubmissions, but the academic and industry organisations that have come to us with resubmission projects are already very sceptical about the evaluation process itself. But why care? After all: there are still enough high-quality projects that do get funded…

Ah, here we touch on a fairly sensitive point, one that goes to the heart of the aims of Europe’s research & innovation programmes and that I would like to illustrate with a very recent example. In fact, it is one of several examples from the past 3 years. Imagine a promising young researcher who submitted a FET proposal that would allow Europe to gain a significant competitive advantage in a commercially attractive market, whilst making a significant contribution to a specific Societal Challenge. The evaluators’ comments in the ESR are just fantastic: great science, even better impact and a top implementation approach. But still, the proposal missed the cut-off threshold by a whisker. Naturally the young researcher is very disappointed. He already has an offer from a major US university and was only waiting for the results of this proposal to decide whether or not to stay. His decision is now clear: ‘bye bye Europe’. Without a proper alternative structure for these very high-quality ‘near-misses’, of which there will be more if the success rate keeps dropping, Europe may lose a much larger contingent of its most promising researchers. We would then need to put even more H2020 money into the Marie Skłodowska-Curie Actions to try to entice them back. That is not very efficient.

In a recent interview, the new Commissioner Moedas showed he clearly understood that the H2020 programme may face a credibility crisis if the cost-benefit of writing project proposals, and the handling of projects that only just fail to reach the cut-off threshold, are not improved. So if the Commission recognises that a problem might be ‘on the horizon’ (pun intended), let’s try to do something about it.

One of the new ideas is to make it possible for proposals that fall between the eligibility and the cut-off thresholds to be ‘transferred’ to the national level, where they could be funded out of the much larger European Regional Development Fund (ERDF), and possibly Interreg as well. After years of financing mostly physical infrastructure to improve regional job creation and economic growth, ERDF is now also being positioned as a real innovation programme at national and regional level. As the failed ‘silver’ H2020 proposals already carry a European ‘seal of approval’ on their technical quality, why not let the ERDF take care of business, Moedas suggested. He is right, of course. ERDF, and to some extent Interreg, is an excellent funding instrument, in particular for projects where the innovation is very closely linked to a particular regional development plan. In other words: it would almost certainly be a perfect fit for projects that just missed the cut-off threshold in programmes like the SME Instrument. There you generally have a single applicant based in one specific region. It would be feasible for the regional government to set up a dedicated fund to support this type of high-class research in its area.

The biggest pitfall for this idea lies in using ERDF for international collaborative proposals. There, the management of the funding process risks becoming hopelessly complex, not to mention triggering an explosion of additional national bureaucratic procedures. Eurostars already operates in a way similar to what is proposed, but for a fairly narrowly defined type of project: the national governments of the participating consortium members, supported by the EUREKA secretariat, must each agree to fund their part of the project out of available national funding. Eurostars projects have regularly been delayed or even cancelled because one Member State decided that its funding priorities lay elsewhere. The 2010 interim evaluation of the Eurostars programme also highlighted this problem, and the programme evaluators clearly stated that “…the national differences in procedural efficiency and in procedures themselves remain unacceptably high. Further improvements are necessary to impose common eligibility rules”. Now imagine this for H2020 projects, where the number of consortium partners, the level of funding requested and the technical (reporting) complexity are all significantly higher. ERDF may be a good instrument, but only if the role and responsibility of the supporting secretariat are beefed up substantially and the rules and procedures of the different countries are much more closely aligned. And then what do we have…

So now what?!

H2020 is, and will probably remain, the best game in town as far as research & innovation funding is concerned. The problems to address concern the cost-benefit of preparing proposals and the credibility of the evaluation. This article certainly does not pretend to deliver a complete solution to these problems, but I hope some of the ideas may trigger others to engage in further discussion. So here goes:

On the application process itself:

- In a 2-stage evaluation process, the 1st stage should be evaluated in a way that makes it clear to applicants why their project has been promoted to stage 2. This means that evaluation reports with concrete suggestions from the evaluators should be given to the applicants who continue in the process. The selection of stage-2 proposals can be made tougher, as long as the evaluators clearly describe their arguments and the success rate of a stage-2 proposal is raised to at least a 1-in-3 chance.

- I also believe it is in the European Commission’s interest to bring more transparency to the evaluation in order to maintain the credibility of its functioning. If the number of proposals continues to rise (or the success rate drops further), this becomes especially important for proposal resubmissions. Here the Commission should, as a standard measure, provide the new evaluation panel with the old ESR and the old proposal. Right now it is largely left to the Commission’s project officer to decide whether to provide the new panel with the old ESR. In future, project officers should ensure that the new evaluation is consistent with the old one, making it unlikely that the new score falls below the old one. No new legislation is needed for this. It is simply a question of willingness on the Commission’s part to come up with a better timetable for evaluators.

On alternative funding for high-quality failed H2020 proposals:

- I fully support the idea of giving single-applicant, SME-type projects that failed in the SME Instrument access to ERDF funds, because it is also in the direct interest of the competent authority to let the company create new jobs from a project idea that has already been technically vetted by the European Commission. In fact: why not go a step further and move the whole SME Instrument programme to the ERDF?

- I believe that an ERDF-type approach for multinational collaborative proposals is not the way forward. Instead I would plead for a revision of the evaluation structure. One way could be for the Commission to periodically define technology priorities for a limited number of domains and let the communities in those domains define the topics and set up the evaluation structure. This approach would generate greater buy-in from researchers within each community and encourage participation in the evaluation process. In other words: ‘let them govern themselves’, to a certain extent. Aspects of the organisational structure of the various existing PPPs and Technology Platforms could be a good starting point. Other experiments, in which ‘domain communities’ are created with a focus on fully open and transparent stakeholder access to topic selection and a trusted peer-review evaluation process, are also being planned and look promising. It will not be the answer to today’s problems, but it could be part of the solution for the day after tomorrow.

On managing expectations:

- If many more examples like the PHC-11 stage-1 Call appear over the next year, then there must be an ‘expectation gap’ between a fairly large group of applicants and the European Commission. It is understandable that applicants want money to fund their research, and H2020 appears to them as the proverbial ‘pot of gold’. But let’s be real: projects scoring well below the cut-off threshold are just not good enough for H2020, and there is a good chance that (repeated) submissions of projects in the same research direction will continue to fail. That message should be conveyed to those applicants much more clearly than is the case now: “Don’t submit (again), because it is not what we are looking for”. It should also reach prospective applicants at a much earlier stage in the process, perhaps through a screening service run by the Commission’s national contact agencies or other services. That does not happen now: ideas are screened, but prospective applicants are not told to “just drop it” if the project concept is not up to the standards expected by H2020. Instead, false hope is given that with sufficient fine-tuning there is still a chance. Proper project screening, combined with a well-founded opinion from the screening authority on whether to submit, could help ease the current deluge of proposals facing the Commission and the evaluators in new Call rounds.

Over the past years the Commission has shown that it is slowly letting go of its very top-down structure of R&D and innovation management. In H2020, topics are now less prescriptive and detailed than, for example, in FP6. Maybe now is the time to take the next step. For H2020, let the Commission take more technocratic decisions based on true ‘societal need’ rather than political decisions aimed at satisfying everybody’s aspirations. Every 2 or 3 years the Commission should define the societal challenges and technology areas with the very highest priority. The relevant communities, comprising the chain from fundamental researcher to technology provider and end-user, should then come up with the appropriate Calls. If the peer evaluation can be properly managed by the community itself, the level and quality of expert participation in the evaluation may just rise and create more acceptance among the people aiming for the next breakthrough.

[1] Ted von Hippel and Courtney von Hippel, PLOS ONE, 4 March 2015.

[2] Danielle L. Herbert, Adrian G. Barnett, Philip Clarke and Nicholas Graves, BMJ Open, 2013. The data relate only to NHMRC Project Grant proposals in 2012.

Are we unintentionally destroying H2020? Waking up to a new reality.

Over the past weeks I have come to realise that collectively we may be on our way to destroying a large part of the European H2020 innovation programme. Not on purpose, but still.

The background to this rather ominous statement is a number of meetings I had over the past weeks with researchers and internal grant managers from different universities and research organisations across Europe. I assessed their comments on when, how and why they join proposal consortia against recently published statistics on the number of proposal submissions and success rates across some of the H2020 sub-programmes during the first year of operation. My conclusion: the H2020 ‘Societal Challenges’ and ‘Industrial Leadership’ pillars suffer from the so-called ‘prisoner’s dilemma’. The only one holding the key to the dilemma is… industry!

In the prisoner’s dilemma, originally a concept from game theory that is often used in economics and political science, two prisoners who are kept apart are each told that the other will soon confess to a crime (for which the police have no evidence), which would result in them getting all the blame. The best collective option for both prisoners would be to keep their mouths shut. After all: there is no evidence. On an individual level, however, each prisoner has most to gain by talking first and blaming the other in order to minimise their own punishment.
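
The logic of the dilemma can be checked with a small payoff table. The sentence lengths below are hypothetical, textbook-style numbers (years in prison, lower is better) and are not taken from this article; the sketch simply confirms that, whatever the other prisoner does, talking leaves each prisoner individually better off, even though both staying silent is the best joint outcome.

```python
# Hypothetical sentences in years (lower is better):
# YEARS[(my_choice, other_choice)] -> my sentence.
YEARS = {
    ("silent", "silent"): 1,  # no evidence: both get only a minor sentence
    ("silent", "talk"): 10,   # the other blames me: I take all the blame
    ("talk", "silent"): 0,    # I blame the other first and walk free
    ("talk", "talk"): 5,      # both blame each other: the blame is shared
}
CHOICES = ("silent", "talk")


def best_response(other_choice):
    """Return my choice that minimises my own sentence, given the other's choice."""
    return min(CHOICES, key=lambda mine: YEARS[(mine, other_choice)])


def total_years(a, b):
    """Combined sentence of both prisoners when I choose a and the other chooses b."""
    return YEARS[(a, b)] + YEARS[(b, a)]


# Talking is the best response whatever the other prisoner does...
dominant = {best_response(other) for other in CHOICES}
# ...yet the collectively best outcome is for both to stay silent.
best_joint = min(
    ((a, b) for a in CHOICES for b in CHOICES),
    key=lambda pair: total_years(*pair),
)
print(dominant, best_joint)  # {'talk'} ('silent', 'silent')
```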

Let me adapt the prisoner’s dilemma to what appears to be going on in H2020 to show you why I am concerned about its future:

Europe’s Research Framework Programmes were originally set up to foster and financially support the highest-quality research in Europe. In the early days in particular, and I would stretch that period up to the end of FP6, proposal developers invested a lot of time, as they really tried to come up with original approaches and high-end research targets. Proposal selection was also tough, and this helped keep the number of submitted applications under control. After all: it cost too much time and energy to develop proposals that would not be funded anyway, especially since there was sufficient national and regional funding around as well.

A new copy/paste mentality appears to have set in

Now we are in a very different situation: national and regional funds are much less available as a result of the recent economic downturn. At the same time, many more organisations have become ‘proposal-savvy’, meaning that they have at least understood which building blocks to put together to arrive at a ‘decent’ quality proposal. You can see that clearly in the much larger number of proposals submitted over the past year that scored above the threshold but did not make the funding cut (which is largely determined by the money available within the call). A widespread copy/paste mentality also appears to have set in: failed proposals are more quickly being rehashed or stripped to act as building blocks in new proposals. As a regular reviewer of my clients’ proposals (and of other proposals in which my clients are a partner), I sometimes see whole sections copy/pasted from other proposals, a few of which I even wrote myself the first time. This process is further facilitated by the fact that the European Commission has made a real effort over the past years to structure and simplify the application process itself. Thanks to the revamped Participant Portal, the proposal templates and the detailed guidelines, the act of proposal writing has never been so easy… Alas, that is not where the challenge lies anyway. The challenge lies in original thinking and in being sensitive to what the world’s consumers/patients/stakeholders/end-users/governments really need and want.

“…Why not join, never mind the quality…”

But most of all, and here I come back to my discussions with the researchers and university grant officers, it looks as if researchers are becoming very pragmatic in their approach to funding in the ‘Societal Challenges’ and ‘Industrial Leadership’ pillars. Instead of only wanting to develop or participate in what they consider the very best innovative projects and consortia, they are starting to join other people’s proposals left, right and centre. After all: writing a page as a partner in someone else’s proposal doesn’t cost much time if you can reuse what was already produced before. In view of the published average success rate for H2020, the attitude seems to have become: “…Why not join, never mind the quality. If I participate in a lot of proposals with minimal necessary input, then one or other of them may still succeed”.
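
The arithmetic behind this ‘lottery’ reasoning is easy to sketch. If each proposal independently had the same chance p of being funded, the chance that at least one of n proposals succeeds is 1 − (1 − p)^n. The sketch below uses p = 0.12, the 12% average H2020 success rate quoted by the Commission; treating every proposal as independent and equally likely to succeed is a simplifying assumption, and in practice low-effort participation would probably lower p for each proposal.

```python
def chance_of_at_least_one(p, n):
    """Probability that at least one of n independent proposals, each with
    success probability p, gets funded: 1 - (1 - p) ** n."""
    if not 0.0 <= p <= 1.0 or n < 0:
        raise ValueError("p must be in [0, 1] and n must be non-negative")
    return 1.0 - (1.0 - p) ** n


# Assumption: every proposal has the same 12% chance, independently of the others.
for n in (1, 3, 5, 10):
    print(f"{n:2d} proposals -> {chance_of_at_least_one(0.12, n):.0%}")
```

Under these assumptions, joining ten proposals gives roughly a 72% chance that at least one is funded (about 32% for three and 47% for five). That is exactly why the strategy is tempting for an individual researcher, even though, when everybody follows it, the overall success rate keeps falling.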

At first sight, that attitude might look like the approach of someone who treats H2020 as a mere lottery: it’s all in the numbers. However, the comments I am picking up point to a different rationale for this increase in undifferentiated participation in H2020 proposals. In particular, ‘mid-level researchers’, constantly under pressure to publish in order to keep their position, appear to be thinking: “The number of submitted proposals is rising fast and the success rate is getting lower and lower. This can only mean that others (i.e. other researchers) are already treating H2020 like a lottery and must therefore be starting to care less about the quality of the project proposals they participate in.” This is essentially what the prisoner’s dilemma is all about: “I will do it before the other(s) do it…”

Commission is downplaying the problem: a new tool instead of a real strategy

In a recent interview with the Brussels-based ScienceBusiness magazine, the European Commission appeared to recognise that there is a problem, but immediately played down any serious discussion by arguing that the new 12% average success rate is further proof of the programme’s success rather than a sign of a structural weakness in its design and implementation. Its main fear, according to the article, is that the large cohort of ‘mid-level researchers’ looking for EU funding will end up crowding out the top 10% of researchers, who see their ideas and efforts go under in the sheer number of proposals that have to be reviewed and the unknown research quality of the people who have to evaluate them. If they drop out, then H2020 really becomes a lottery and the EC’s flagship becomes a farce.

So what is the Commission doing about this? Basically, the core of its new approach is to implement the 2-stage application procedure across most or all of H2020, with the addendum that evaluators will be instructed to be really tough. Proposals that get through the first round should in future have a 1-in-3 chance of being funded, instead of 1-in-8 or less as is now the case. A laudable approach, but will this solve the problem? I fear not, as the 2-stage process is just a tool and not a real strategy. In fact, for Europe’s taxpayers it might even decrease H2020’s return on investment. After all, the time that all these researchers invest in preparing all those 1st-stage proposals is still the same, and so is the time and money lost. Many of these proposals, whilst maybe not the most innovative, would still create jobs and opportunities if given the chance. Under a much stricter first-stage assessment, these projects will simply not happen. Given what I said before about the ease of rehashing proposals, I could also imagine proposals being submitted over and over again in the hope of an off-chance success in reaching the next round. This would create a merry-go-round of proposals that can only end in a collapse of the whole evaluation system. As a preventive measure, I would at least add a rule that proposals which made it over the threshold but did not qualify for funding cannot be resubmitted for at least 2 years. That would force these researchers either to look very hard for funding elsewhere or to reassess the quality of their work and think really hard before making a new effort. Consortia that fail the threshold altogether should be told much more directly that their proposal is simply not good enough and that they are advised not to resubmit this or a similar proposal. “Wanna be tough, then act tough!” I say.
H2020 is not an instrument for gaining research credibility; it should be an instrument for already high-profile researchers to set the European research bar even higher.

Industry holds the key! Impact before Scientific Excellence

It will be clear to you that I do not find the Commission’s remedial emergency action very impressive or imaginative. To me, the way out of the prisoner’s dilemma lies in going back to what H2020 was meant to do: foster innovation! Innovation is only innovation if the technology you invented or improved actually makes our lives better, either because we want something (demand) or because it meets a societal need. For that you need industry. Industry is the only party that takes a significant financial risk when developing new things for which it hopes and expects there will be commercial demand.

To stem the tide of submitted proposals and bring success rates back to some form of normality, I would therefore be very much in favour of reordering the chapters of the H2020 proposal template so that the Impact section comes first. Only if Impact (i.e. quantifiable future demand or need and a clear approach to meeting that demand or need) can be convincingly demonstrated on the basis of a summary description of the scientific excellence is it worthwhile asking the consortium for a full proposal. This approach works; just look at the results of the evaluation of proposals submitted to the H2020 SME Instrument!

In stage-2 proposals the Impact section should receive even more weight during evaluation. Adding 1.5 points is not enough. If a project really solves a big societal problem, or if a technology really has the potential to put Europe in the lead in a new area, then this should take prime spot, above Scientific Excellence. This should probably not even be contentious, as ‘Big Impact’ tends to happen because of ‘Significant Research’ anyway. So why not add 3 points to the Impact section if a proposal scores over the threshold?! That is a statement which will at least force every consortium to think really hard before submitting a proposal. The best yardstick will be a strong presence (>50%) of industry in the consortium.

At least the Commission appears to have understood that projects that make it through the stage-1 evaluation should not be left in the dark about their weaknesses and improvement potential. Until now, no evaluation feedback was provided after stage 1. I have always found this strange. After all, it is in everybody’s interest that those proposals reach their maximum potential by the second evaluation round, especially where Impact is concerned. So why not help them by showing them what the evaluators thought was already excellent and what should be improved?! If the interview statement is to be believed, project consortia will in future see the stage-1 Evaluation Reports and be able to take valuable comments and criticisms on board before they are judged in round 2. At least that’s something, but come on, Commission: take some real action to save the H2020 programme before its intended success becomes its downfall.

Please note that when I speak about H2020 in this specific article, I explicitly exclude the H2020 ‘Excellent Science’ pillar and the ERC. These were created specifically to foster fundamental research and ‘research curiosity’ and put less emphasis on (commercial) Impact, even though they still may result in significant Impact further down the line.

Give failed H2020 ‘SME-INSTRUMENT’ grant proposals a second chance!

For an SME, the statistics on getting European public funding are not that great. One of the ways the European Commission has tried to increase SME interest and participation is by creating a special ‘SME Instrument’ within the overall H2020 framework programme. Only SMEs need apply! The question I am raising here is: does Europe’s economy get maximum benefit from this programme, or is the Commission missing a vital element?!

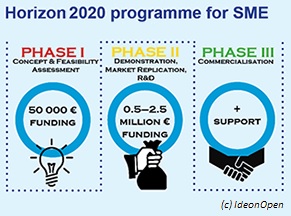

Apart from the many hours that go into writing a credible funding proposal and completing the forms in a programme like the H2020 SME Instrument, the competition during the evaluation of proposals is fierce. The SME Instrument is divided into 3 phases: in ‘Phase 1’ the (starting) SME requests funding to develop a business plan toward the actual technical development of a product or service. ‘Phase 2’ proposals concern the actual research and technical development of a product or service up to the point where it is almost ready for market introduction. ‘Phase 3’ (commercial introduction through special loans) is not yet fully operational.

Before I come to my point, here are some numbers: in its first year of operation (June 2014 to March 2015), a total of 8542 funding proposals were submitted under ‘Phase 1’. Roughly 7% of them were funded. To be more precise: of the 8542 proposals, only 874 scored ‘above threshold’, meaning that they met the minimum quality level to be considered for funding. Of those, about 2/3 were then ranked high enough for the EC to offer them the money (the statistics were presented by the European Commission during the March 24 ScienceBusiness conference on the success of the H2020 programme).

For ‘Phase 2’, the number of proposals submitted was relatively low. The most likely reason is that the scheme is still new and not many companies sufficiently understood how projects needed to be framed. A total of 1823 proposals were submitted, of which 312 were ‘above threshold’. 134 of those were offered the grant, so about 43%.

So what’s the issue? Consider this: out of the 874 ‘Phase 1’ proposals that were considered to have an excellent business idea, with a real market and a well-defined pathway from idea to product, 282 still did not get the EC grant in the end. And what happens to these highly innovative, failed ideas as far as the Commission is concerned? … Nothing! If the proposers are not discouraged by the rather complicated application process, they can of course resubmit their proposal. But there is a time-lock on doing that: you cannot resubmit your idea in the very next round. For a (starting) SME, this is terrible! Markets move fast, and ideas are developed and/or snatched up elsewhere in the blink of an eye. From the perspective of Europe’s economy, it’s even worse: each of these proposals was judged by experts to be innovative, with the potential to give Europe a competitive edge. Each of these proposals comes with a real prospect of creating new jobs; jobs that Europe really needs right now.

One might say that ‘as far as Europe is concerned’, the Commission is doing an even worse job with regard to ‘Phase 2’ proposals: despite the higher funding success for projects above threshold, out of the 312 proposals that would bring business concepts to the stage at which companies can start thinking about mass production and hiring extra staff, 178 were dismissed. To the Commission I would say: “Are you serious??!”
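
The funnel described above can be checked with a few lines of arithmetic. The sketch below uses only the figures given in this article (submitted, above-threshold and funded proposals per phase, with the Phase 1 funded count taken as 874 minus the 282 unfunded above-threshold proposals); it is a consistency check on the quoted percentages, not an official EC statistic, and the names `PHASES` and `funnel` are purely illustrative.

```python
# Figures quoted in this article for the first year of the SME Instrument.
PHASES = {
    "Phase 1": {"submitted": 8542, "above_threshold": 874, "funded": 874 - 282},
    "Phase 2": {"submitted": 1823, "above_threshold": 312, "funded": 134},
}


def funnel(submitted, above_threshold, funded):
    """Return the key ratios of the evaluation funnel for one phase."""
    return {
        "above_threshold_rate": above_threshold / submitted,
        "funded_of_above_threshold": funded / above_threshold,
        "overall_success_rate": funded / submitted,
        "above_threshold_not_funded": above_threshold - funded,
    }


for phase, figures in PHASES.items():
    r = funnel(**figures)
    print(
        f"{phase}: {r['above_threshold_rate']:.1%} above threshold, "
        f"{r['funded_of_above_threshold']:.0%} of those funded, "
        f"{r['overall_success_rate']:.1%} overall, "
        f"{r['above_threshold_not_funded']} above-threshold proposals unfunded"
    )
```

On the article’s own figures, this gives an overall success rate of about 6.9% for Phase 1 and 7.4% for Phase 2, with 282 and 178 above-threshold proposals respectively left without funding.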

My point, and my suggestion to the Commission, is that it should urgently find a way to better capitalise on the fact that its rigorous technical evaluation has uncovered so many innovative projects. Although I personally believe this holds true across all of H2020, it is particularly true for the SME Instrument because, to me, there is a fairly simple solution at hand, given that most or all proposals are submitted by single applicants rather than cross-border consortia.

My view is that the Commission should send failed (but above threshold!) proposals directly to the political region (e.g. province, bundesland, department or similar) in which the SME is located. After all it is there that the company will grow and most likely create these new jobs. So it is in the direct interest of the region to know about these initiatives and support them.

Having been an elected regional politician myself for eight years, I believe I can say that this is exactly what these regions are looking for. In fact, they will most certainly be delighted that the Commission has already taken care of the really difficult bit, namely the technical evaluation of the innovation and of the delivery capacity of the company in question. I would also argue that every political region has its own ‘pot of money’ ready to support regional economic innovation initiatives, either directly or through a regional Economic Development Company or Chamber of Commerce. And then there is also the European Regional Development Fund (ERDF, known in Dutch as EFRO), which is being repositioned anyway from funding public infrastructure toward funding innovation. One might also consider the Interreg programme as a potential funder of a regionally located project where, for example, it touches intra-European borders. What I am arguing for here is in fact a move that should particularly suit EC Commissioner Frans Timmermans in his drive to deregulate and to ensure that EU policies are linked much more closely to what is needed nationally and regionally. My suggestion is neither rocket science nor particularly difficult to implement (which is not the case for cross-border consortium applications, where you would run the risk of duplicating the fairly cumbersome international Eureka/Eurostars bureaucracy).

So, dear Commission: please give high-class – but failed – proposals in the SME Instrument a second chance by sending evaluated proposals directly to the regions concerned. For them, this could be an important new approach toward their own regional development strategy. For you as Commission it would strengthen your position as broker and custodian of European innovation and job creation. A win-win for all!

This opinion article is a follow-up to an article I wrote in May 2015 for the Brussels-based ScienceBusiness magazine.